Did I unplug the iron?

One of my recurring concerns when leaving home is whether I’ve turned off the iron. When purchasing an iron, I specifically chose one with auto shut-off features that activate when the device remains in a certain orientation for more than a few minutes. This provides some peace of mind, but the worry still lingers. If you don’t already have an iron with three-way shut-off capability, I highly recommend investing in one.

If you have a smart home setup, this problem could be addressed using a smart switch. You could plug the iron into a smart switch, check remotely whether it’s on or off, and set up automations that turn it off for you. There might even be smart irons on the market by now! There are smart fridges, dishwashers, microwaves… so why not smart irons?

But could we solve this problem just by asking an LLM? Multi-modal LLMs have vision capabilities that make this possible. You could simply take a photo of your room from an existing security camera and ask the LLM questions about the status of your iron. The nice thing about this approach is that your automation isn’t limited to a specific appliance: you can ask all sorts of questions about objects in the photo.

Experimenting with Visual LLM Capabilities

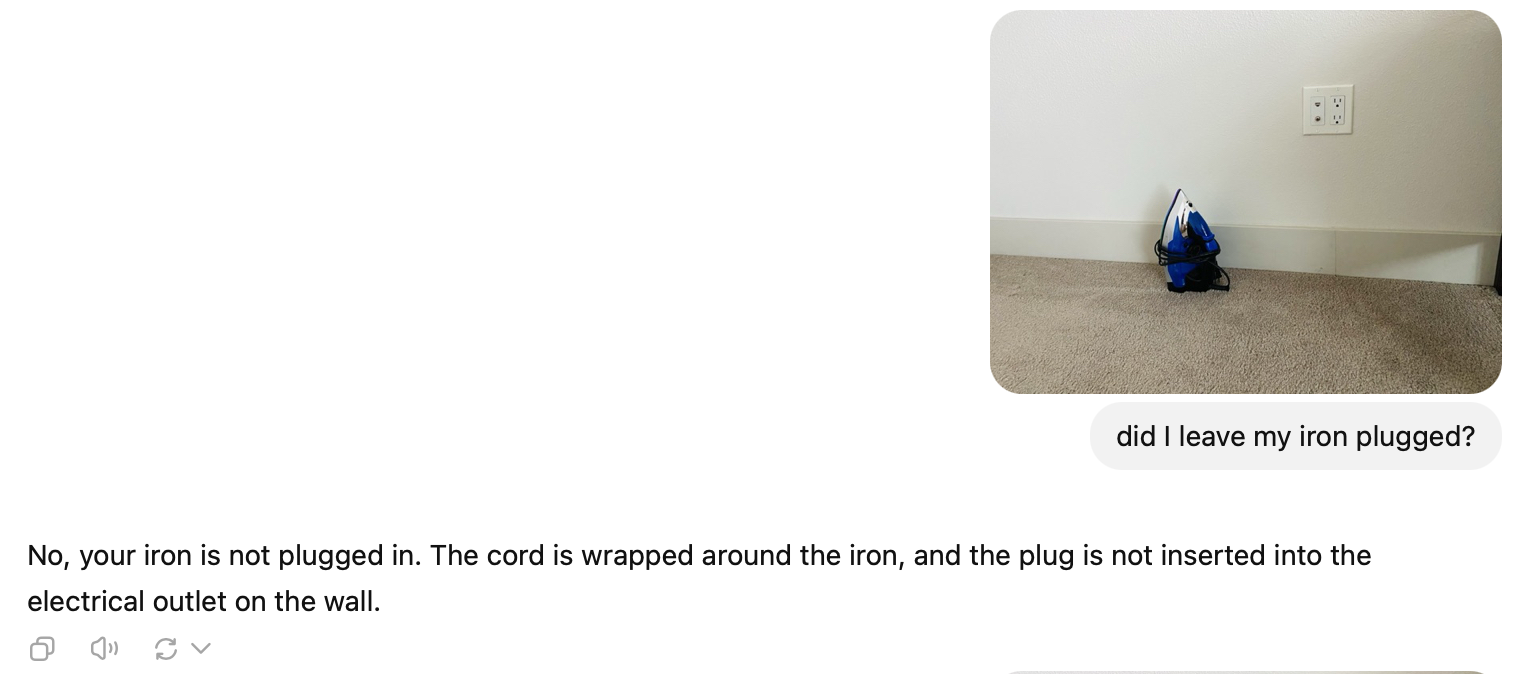

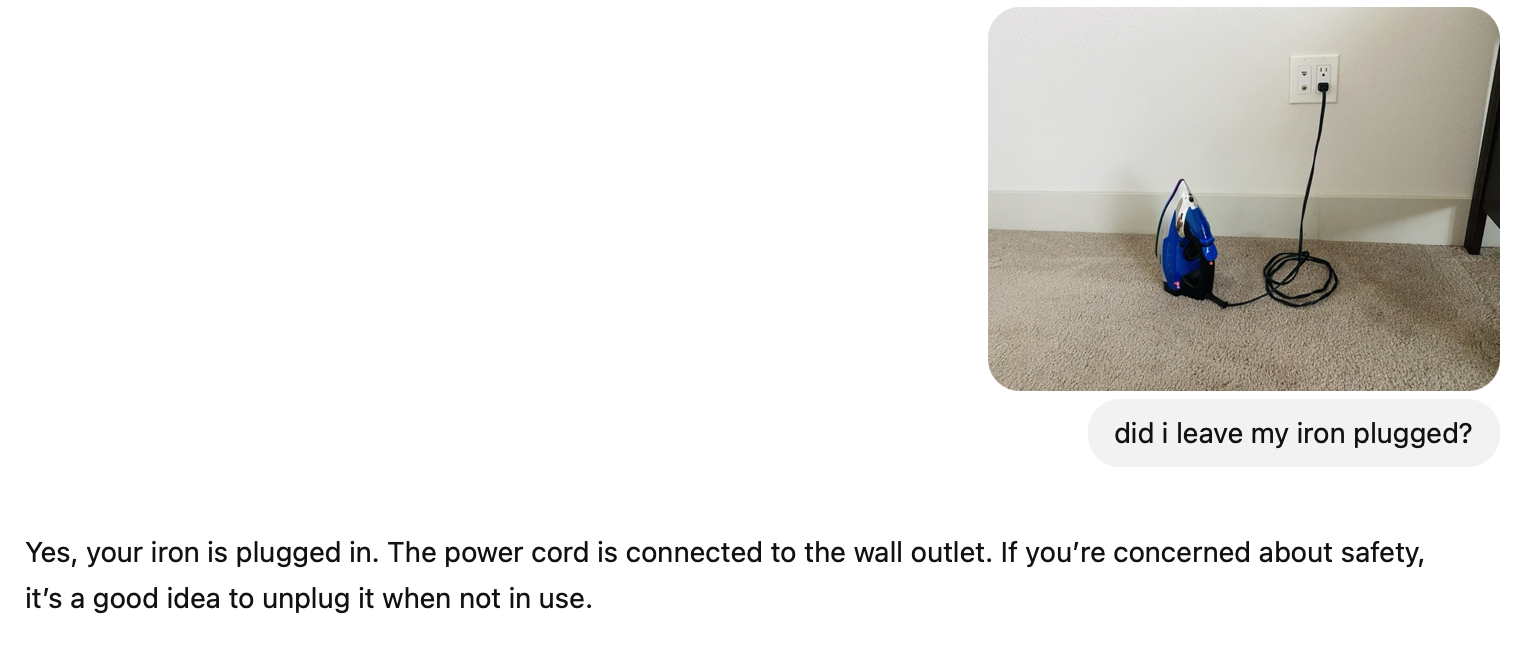

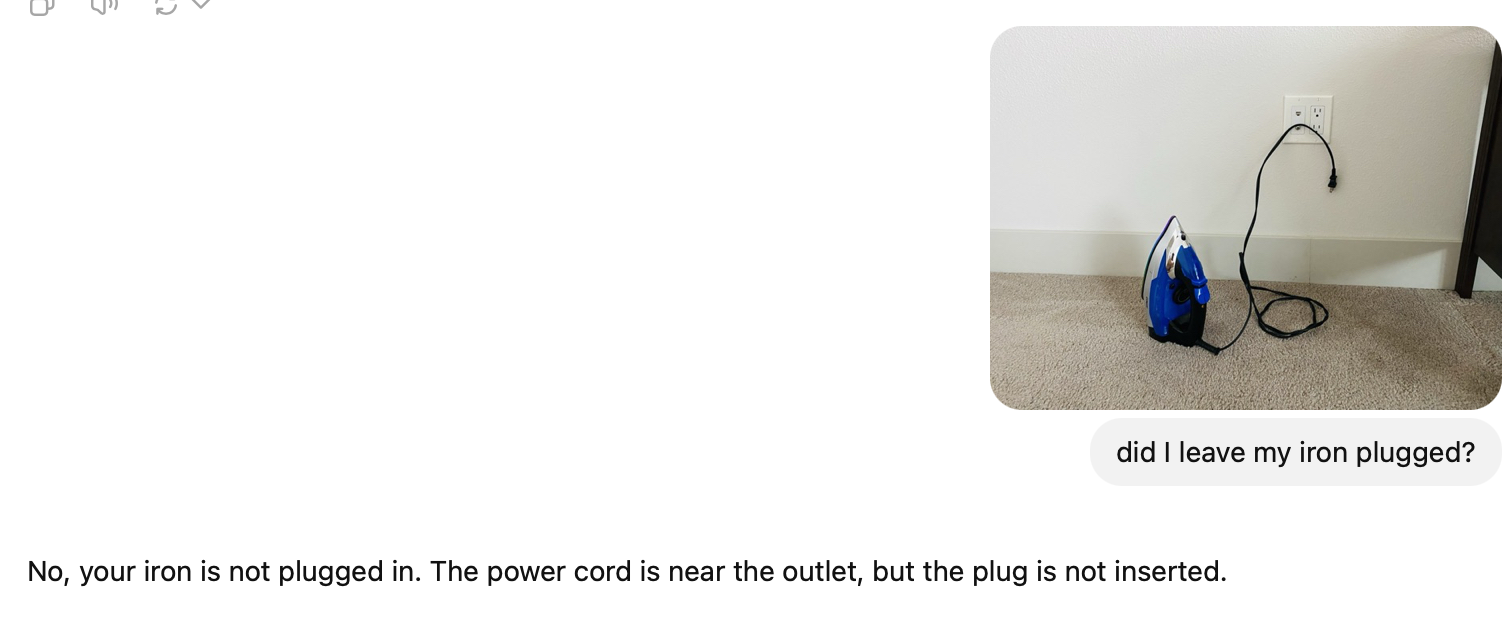

I decided to test this concept by taking photos of my home and using GPT-4o to answer questions about my space while I was away.

In these basic examples, GPT-4o got it right each time!
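For anyone who wants to try the same experiment from a script, here is a minimal sketch of how a photo and a question could be packaged for a vision-capable chat model. It assumes the `openai` Python package and an `OPENAI_API_KEY` environment variable; the function names and the file name in the usage note are made up for illustration, and this is not necessarily how the screenshots above were produced.

```python
import base64


def build_vision_messages(image_bytes: bytes, question: str,
                          mime: str = "image/jpeg") -> list:
    """Build a chat 'messages' list with one text part and one inline image."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": question},
                # The image is embedded as a base64 data URL.
                {"type": "image_url",
                 "image_url": {"url": f"data:{mime};base64,{encoded}"}},
            ],
        }
    ]


def ask_about_photo(path: str, question: str, model: str = "gpt-4o") -> str:
    """Send the photo and the question to the model and return its answer.

    Assumption: the `openai` package is installed and OPENAI_API_KEY is set.
    """
    from openai import OpenAI  # imported lazily; only needed for the real call

    with open(path, "rb") as f:
        messages = build_vision_messages(f.read(), question)
    client = OpenAI()
    response = client.chat.completions.create(model=model, messages=messages)
    return response.choices[0].message.content
```

A call might look like `ask_about_photo("laundry_room.jpg", "Is the iron on the ironing board plugged in?")`, where the file name is a placeholder for whatever snapshot your camera produces.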

Integration with Home Assistant

One easy way to leverage this feature would be to use Home Assistant.

I’ve been experimenting with various smart home setups and protocols including Zigbee, Z-Wave, HomeKit, Thread, Matter, standard WiFi, and others. Home Assistant provides the flexibility to test different approaches and build hybrid smart home systems that aren’t tied to a single ecosystem.

Home Assistant has a bunch of automation options, including the ability to create and run scripts. This means your smart home can execute specific actions in response to certain triggers. These automations could now easily incorporate multi-modal LLMs.

For example, the Python Scripts integration enables users to run Python scripts as actions for automations.
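Whichever integration triggers the automation, the LLM needs a current frame from a camera. One possible way to get it is Home Assistant's REST API, which serves a camera's latest image at `/api/camera_proxy/<camera entity_id>`. The sketch below runs as a standalone script outside Home Assistant; the base URL, the token, and the `camera.laundry_room` entity are placeholders I made up, and you would need a long-lived access token from your own instance.

```python
import urllib.request


def build_snapshot_request(base_url: str, token: str,
                           camera_entity: str) -> urllib.request.Request:
    """Build an authenticated request for a camera's current image."""
    url = f"{base_url.rstrip('/')}/api/camera_proxy/{camera_entity}"
    return urllib.request.Request(
        url, headers={"Authorization": f"Bearer {token}"}
    )


def fetch_snapshot(base_url: str, token: str, camera_entity: str,
                   timeout: float = 10.0) -> bytes:
    """Download the image bytes; raises on network or HTTP errors."""
    request = build_snapshot_request(base_url, token, camera_entity)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read()


if __name__ == "__main__":
    # Placeholder values: replace with your own instance, token, and entity.
    image = fetch_snapshot("http://homeassistant.local:8123",
                           "YOUR_LONG_LIVED_TOKEN", "camera.laundry_room")
    print(f"Fetched {len(image)} bytes")
```

The returned bytes can then be handed to whatever vision model you are using.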

Would you really need smart cameras next year?

The vision capabilities of multi-modal LLMs mean you don’t necessarily need specialized smart cameras to detect specific events or conditions. The catch is cost: features like face recognition or fire detection require continuous processing of video frames, which gets expensive if you’re sending every frame, or even every nth frame, to an LLM over the internet.
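To get a feel for the volume involved, here is a small back-of-the-envelope sketch that counts how many LLM requests a single camera would generate per day at a given sampling rate. The per-request price is deliberately left as a parameter, since it depends on the provider and image size; I'm not assuming any real price here.

```python
def requests_per_day(fps: float, every_nth_frame: int,
                     hours: float = 24.0) -> int:
    """Number of frames sent if every n-th frame of the stream is sampled."""
    if every_nth_frame < 1:
        raise ValueError("every_nth_frame must be at least 1")
    total_frames = fps * hours * 3600
    return int(total_frames // every_nth_frame)


def daily_cost(requests: int, cost_per_request: float) -> float:
    """Total cost for a day; cost_per_request is a placeholder you supply."""
    return requests * cost_per_request


if __name__ == "__main__":
    # A 30 fps camera, sampled at every frame, once a second, once per 30 s.
    for n in (1, 30, 900):
        print(f"every {n}th frame: {requests_per_day(30, n)} requests/day")
```

Even sampling a 30 fps stream only once per second still means 86,400 requests per camera per day, which is why on-demand questions are a much cheaper starting point than continuous detection.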

As language models become more affordable and efficient, they could run directly on local devices, simplifying many smart home functionalities. This would eliminate the need for specialized smart cameras in some cases. Instead, basic inexpensive cameras connected to a hub running a lightweight multi-modal language model could handle the processing requirements effectively.

Expanding to Additional Use Cases

Passing that photo to the LLM means that we can use it for a variety of purposes. For instance, asking the assistant or smart home:

- “Is my white bottle in my bedroom?” or

- “I can’t find my white bottle, can you check all the rooms and locate it?” or

- “Did Fido finish eating?”
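The "check all the rooms" question could be handled by looping over one snapshot per room and asking the same yes/no question about each. Here is a rough sketch of that idea. The `ask` callable is a stand-in for whatever function sends an image and a question to your vision model, and the room names are hypothetical.

```python
from typing import Callable, Dict, List


def find_object_in_rooms(snapshots: Dict[str, bytes], description: str,
                         ask: Callable[[bytes, str], str]) -> List[str]:
    """Return the rooms whose snapshot the model says contains the object.

    snapshots maps a room name to that room's image bytes.
    ask(image_bytes, question) must return the model's text answer.
    """
    question = (f"Is there a {description} visible in this photo? "
                "Answer only 'yes' or 'no'.")
    rooms_with_object = []
    for room, image in snapshots.items():
        answer = ask(image, question).strip().lower()
        # Treat anything that starts with "yes" as a positive match.
        if answer.startswith("yes"):
            rooms_with_object.append(room)
    return rooms_with_object
```

Constraining the model to a yes/no answer keeps the parsing trivial, at the cost of losing detail such as where in the room the object is; a follow-up question on the matching room could fill that in.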

Open Questions

One obvious question is how well this approach would work when there are numerous objects in the room, perhaps including duplicates. But the pace at which LLMs have been improving suggests that these challenges will become manageable soon.

I’ll be incorporating some of these vision capabilities into my Home Assistant setup to see how they work in the real world. I’ll start with on-demand questions instead of sending a bunch of frames to ‘detect’ certain things.